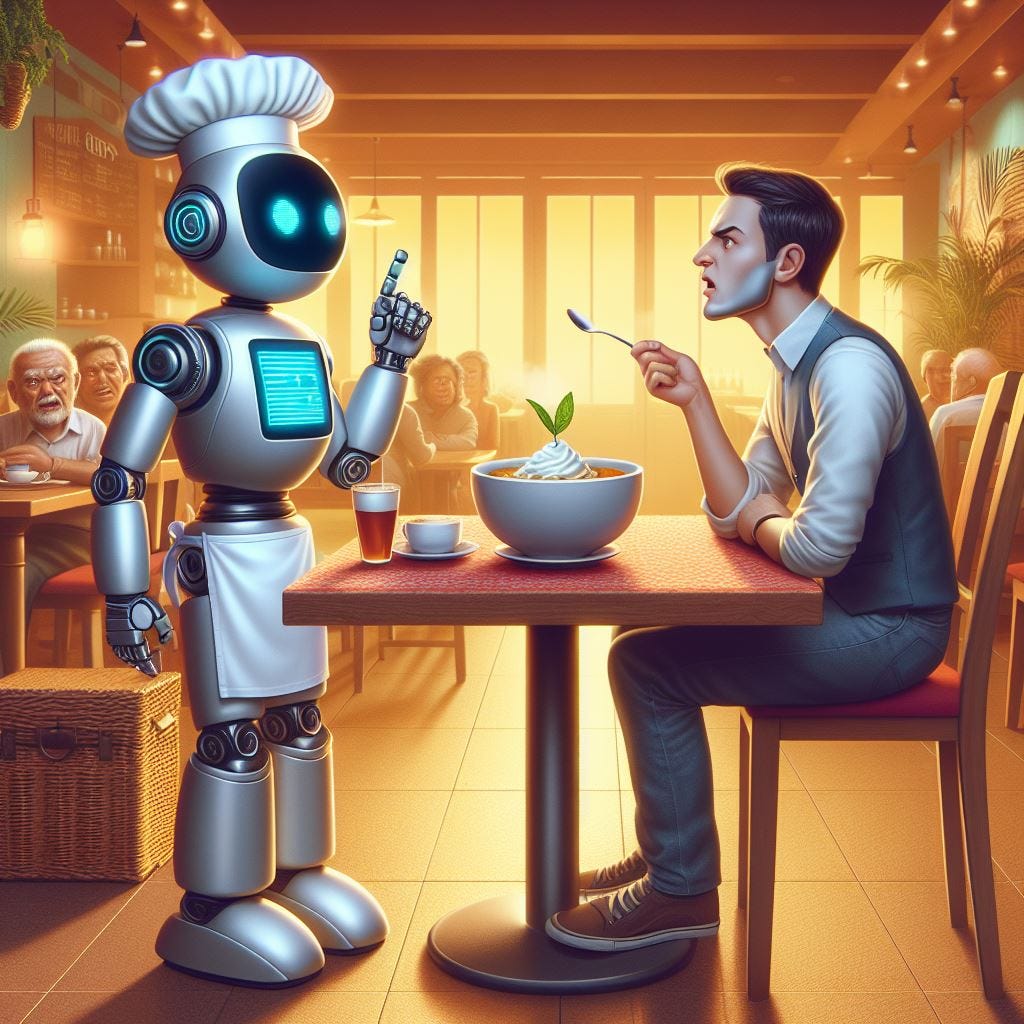

Waiter, there's an AI in my soup!

Just as we were trying to get rid of Facebook’s and Google’s cookies and trackers, AI systems are starting to read our emotions to sell us stuff.

A couple of days ago, I watched a tech influencer’s reel celebrating how the Instituto Tecnológico de Aragón (Aragón’s Institute of Technology, Spain) had succeeded in using AI to analyze the emotional response of people at a dining table to the food and service they received[1].

It immediately made me think of how dangerous things could get if we leave researchers, governments, and for-profit organizations to their own devices and appetites in everything related to AI.

It wasn’t long before media outlets started praising the concept. El Heraldo (Spain) explained how this AI system could be used to analyze, in real time, how diners respond when more expensive ingredients are replaced with cheaper ones[2].

La Vanguardia (Spain), meanwhile, highlighted the idea of concealing the required 360° camera in a vase-like device in the middle of the table to capture diners’ reactions while they eat[3].

El País (Spain) took part in one of the experiments and published very interesting photos, along with comments from the researchers themselves[4].

What if I don’t want to opt in?

In March of this year, the European Union finally passed its Artificial Intelligence Act, which includes strict rules defining the AI practices prohibited within its jurisdiction (see my recent article on that topic)[5].

Chapter II of the EU AI Act, “Prohibited Artificial Intelligence Practices”, explicitly forbids the use of AI to infer emotions in workplaces and educational institutions. It prohibits:

“the placing on the market, the putting into service for this specific purpose, or the use of AI systems to infer emotions of a natural person in the areas of workplace and education institutions, except where the use of the AI system is intended to be put in place or into the market for medical or safety reasons.”

But it says nothing about AI systems designed to infer emotional responses in public places such as restaurants and shops, for marketing and product research, without the consent of the people involved.

The developers of an AI system built for that purpose only need to register it in the EU database for high-risk AI systems and obtain the proper certification to market it within the EU.

So, in theory, the use case explored by the Instituto Tecnológico de Aragón is permissible, and it doesn’t require explicit authorization from the diners involved.

A peek into our (potential) future

This reminds me of an interesting scenario from the sci-fi series Babylon 5.

In the future depicted in Babylon 5, negotiations of all sorts (commercial, governmental, private, etc.) could take place in the presence of a “Commercial Telepath”[6].

Commercial Telepaths were there to verify whether the parties to a negotiation were being truthful, by reading their minds directly during the process.

It was a government-sanctioned and regulated profession, and it was seen as the natural way to do things.

Now we are entering an era in which we may be subject to constant analysis by AI-enabled tools that give businesses, governments, and organizations an additional edge.

The power asymmetry grows in favor of organizations and to the detriment of individuals.

And this is happening right when we are fighting hard to recover our right to privacy online, by stopping the all-pervasive marketing trackers that Facebook, Google, and others place on us.

The Road Ahead

I’m a tech enthusiast, as any good Software Engineer should be. I’ve been involved in AI stuff for at least 25 years. And I think it will be an important part of our future.

But it is our responsibility, as individuals, as responsible citizens, to keep things under control.

The lifecycle of an AI system involves those who have an AI-based idea and try to make it happen, those who acquire and deploy the system for their commercial benefit, and those who are affected by its use.

No matter where we sit in the AI market (creators, deployers, users, or regulators), we have to stay informed and demand that every advance works in our favor.

This might mean pushing for the protections in AI regulations to also cover commercial settings like the one described in this post.

What do you think about this?

Let me know in the comments below!

When you are ready, here is how I can help:

“Ready yourself for the Future” - Check my FREE instructional video (if you haven’t already)

If you think Artificial Intelligence, Cryptocurrencies, Robotics, etc. will cause businesses to go belly up in the next few years… you are right.

Read my FREE guide “7 New Technologies that can wreck your Business like Netflix wrecked Blockbuster” and learn which technologies you must be prepared to adopt, or risk joining Blockbuster, Kodak, and the horse carriage in the ancient history books.

References

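1. Éxito de pruebas de la mesa sensorial del Centro de Innovación Gastronómica de Aragón [Successful tests of the sensory table at the Centro de Innovación Gastronómica de Aragón]

2. Una mesa sensorial desarrollada en Aragón ha creado el primer algoritmo que detecta las emociones de gente comiendo [A sensory table developed in Aragón has created the first algorithm that detects the emotions of people while they eat]

3. Así funcionará la mesa sensorial que medirá las emociones de los comensales [How the sensory table that will measure diners’ emotions will work]

4. La mesa sensorial que mide las emociones de los comensales mientras comen [The sensory table that measures diners’ emotions while they eat]

5. Prohibited Artificial Intelligence Practices in the European Union. https://alfredozorrilla.substack.com/p/prohibited-artificial-intelligence-practices-eu

6. Commercial Telepath - Babylon 5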
