Trustworthy Artificial Intelligence (Part 3)

Realisation of Trustworthy AI: What needs to happen so we can build a Trustworthy AI from the ground up

This is a four-part series, and this is the third part. You can find the others here:

In my previous articles, I began exploring the European Union’s approach to defining and regulating the development of Trustworthy Artificial Intelligence1.

If we are to confidently delegate important tasks to Artificial Intelligence systems, we need to make sure those systems are trustworthy, from their design and implementation through to their operation and maintenance.

But, as we have already seen, we need a global agreement on what it means to be “trustworthy”, because Artificial Intelligence systems will be cross-border applications.

If every jurisdiction has different regulations and requirements, it will be very difficult for AI developers to offer their services consistently. This could create markets where this kind of technology is not available at all, or is available only in a limited fashion, creating undesired inequalities and disadvantages.

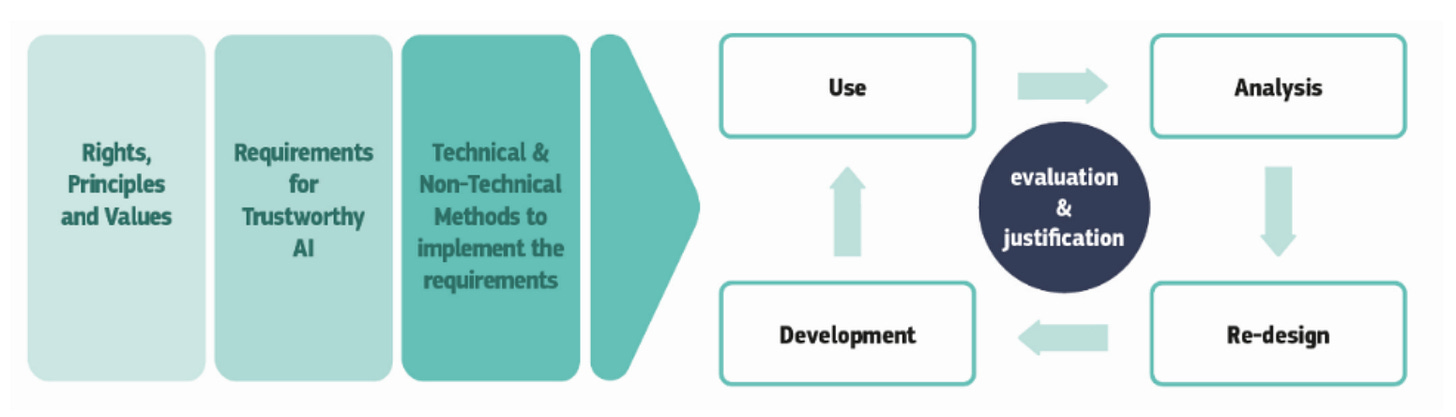

The European Union started this discussion from the abstract Fundamental Rights in its Charter, moving down to principles, then to requirements for their real-world realisation and, finally, to ways of assessing the technology during development and once in operation.

In this article, we will discuss the requirements any Artificial Intelligence system must satisfy to be deemed trustworthy, and the methods (both technical and non-technical) for realising them.

Requirements of Trustworthy AI

Trustworthiness in Artificial Intelligence must cover the whole lifecycle: design, development and operation. Performing quality control on the final product is not enough, because many things can go wrong during design and development that won’t become apparent until it is too late (when the damage has already been done).

According to the European Union expert group, four types of stakeholders will participate in this process to help guarantee that an AI system is trustworthy:

Designers/Developers: This group is responsible for designing and building the AI tools, and should make sure to follow the guidelines and regulations already in place.

Deployers: This group is responsible for selecting and deploying AI tools for use in their organizations or by the general public. They should make sure those tools have been designed and built according to the guidelines and regulations.

Users: This group makes direct use of the AI tools, so they are responsible for demanding that these tools are compliant.

Broader society: This group does not use the AI tools directly but, like the Users, is responsible for exerting pressure on the Deployers and Designers/Developers so those tools are compliant.

According to the EU High-Level Expert Group on Artificial Intelligence, the following are the minimum requirements an AI system must meet to be considered Trustworthy:

Human Agency and Oversight

Technical Robustness and Safety

Privacy and Data Governance

Transparency

Diversity, Non-Discrimination and Fairness

Societal and Environmental Wellbeing

Accountability

All of them are equally important but, as we discussed in my previous article, they can be at odds in certain situations. When that happens, careful decision-making will be necessary to reach the best possible result under the circumstances.

We will review each one of them.

1. Human Agency and Oversight

We have seen that “Respect for Human Autonomy” is one of the principles that must be protected during the development of AI systems. This means AI should not be allowed to make decisions or take actions on its own, bypassing the required human agency and oversight.

Fundamental Rights

We are entering the Era of AI. The way society works, our jobs and our personal relationships are going to be profoundly affected, just as they were during previous Industrial Revolutions.

We cannot predict every scenario in which the use of AI could harm human beings and affect our Fundamental Rights, so we must stay aware and vigilant to ensure any issue that arises is dealt with promptly.

Governments, the Private Sector, Academia and the General Public must keep their eyes open, especially during this initial period, when we have so many questions and so few answers.

Human Agency

Human Agency means we use AI, and not the other way around. AI systems must support human efforts, and we humans must have the final say on whether to use whatever the AI produces.

We have seen software systems used to manipulate populations (as in the Cambridge Analytica scandal). AI systems can make this kind of undesirable behavior easier to achieve, so active vigilance is needed to prevent it.

Human Oversight

Human Oversight means that we, as humans, are always “in the loop”. Systems whose AI decisions cannot be monitored, evaluated and, if necessary, overridden by human operators must not be allowed.
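
To make the idea more concrete, here is a minimal Python sketch of a human-in-the-loop gate. It assumes a hypothetical setup in which every AI decision carries a confidence score and a high-risk flag; the names `Decision` and `route_decision` and the 0.9 threshold are illustrative assumptions, not something prescribed by the EU guidelines. High-risk or low-confidence decisions are never executed automatically: a human reviewer sees them and can accept or override them.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    action: str        # what the AI proposes to do
    confidence: float  # the model's own confidence, 0.0 to 1.0
    high_risk: bool    # whether the action affects people's rights or safety


def route_decision(
    decision: Decision,
    human_review: Callable[[Decision], Optional[str]],
    threshold: float = 0.9,  # hypothetical confidence threshold
) -> str:
    """Return the action to execute, escalating to a human when required.

    The reviewer returns a different action to override the AI,
    or None to accept the AI's proposal as-is.
    """
    if decision.high_risk or decision.confidence < threshold:
        override = human_review(decision)
        return override if override is not None else decision.action
    # Low-risk, high-confidence decisions proceed without escalation.
    return decision.action


# Example: a reviewer who redirects every high-risk proposal to a human officer.
def cautious_reviewer(d: Decision) -> Optional[str]:
    return "refer_to_officer" if d.high_risk else None


print(route_decision(Decision("approve_loan", 0.97, high_risk=True), cautious_reviewer))
# prints "refer_to_officer"
print(route_decision(Decision("send_reminder", 0.95, high_risk=False), cautious_reviewer))
# prints "send_reminder"
```

In a real deployment the reviewer would be a person working through a queue rather than a function; the point is that the override path exists by design.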

A clear example is preventing the development and deployment of Lethal Autonomous Weapon Systems (LAWS). Think of an AI-powered automated machine gun turret that decides on its own when to kill and when not to. Or the deployment of “killer robot dogs2” (like those cute quadruped mechanical “dogs” we have already seen, but armed with lethal weapons and able to act on their own, without human oversight).

2. Technical Robustness and Safety

Trustworthy AI requires that any AI system upholds the “Principle of Prevention of Harm”. Technical Robustness means that AI systems are designed and developed in such a way that no harm, intended or unintended, can come from their use.

Furthermore, once deployed, AI systems must be continuously assessed to ensure they still operate under the assumptions they were designed for; if those environmental assumptions have changed, the systems must be adjusted accordingly.

Resilience to Attack and Security

Artificial Intelligence systems will be subject to malicious attacks. They already have been: just remember Microsoft’s Tay experiment, which turned into a racist and offensive chatbot less than a day after going online3, because of its interactions with people.

Adversarial patterns4 are a technique that lets attackers craft visual patterns that prevent Computer Vision systems from correctly identifying the objects they were trained to recognize. Adversarial patterns applied to a road sign, for example, could cause accidents involving AI-powered vehicles that fail to recognize it.

A Risk Management approach is needed to protect the data and operation of AI systems against tampering. The systems’ health and assumptions must also be monitored constantly, so proactive adjustments can be made before harm is done.
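
Monitoring whether a system still operates under its design assumptions can start with something as simple as comparing live input data with the data seen during training. The sketch below uses the Population Stability Index (PSI), a common drift metric chosen here purely as an illustration; the guidelines do not prescribe any specific metric, and the 0.25 alert threshold is an industry rule of thumb, not a standard.

```python
import math
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample.

    Bins are equal-width over the baseline range; a higher PSI means the live
    data distribution has moved further away from the baseline.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width if all values are equal

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        eps = 1e-6  # keeps empty bins from causing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [x / 100 for x in range(1000)]  # feature values seen during training
live = [5 + x / 200 for x in range(1000)]  # live values shifted to the upper range
if psi(baseline, live) > 0.25:  # rule-of-thumb threshold for significant drift
    print("Significant drift: review the model's design assumptions")
```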

Fallback Plan and General Safety

AI systems will be used in situations where a malfunction can cause harm. Fallback systems must be in place that allow a human to take over, or that let a more traditional (and proven) system provide a validating input.
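
A minimal sketch of the second option, assuming a hypothetical AI model and a proven rule-based calculation that both produce a number for the same input: the proven rule acts as the validating input, and when the AI fails or disagrees too much, the system falls back to the rule and flags the case so a human can take over. The function name `with_fallback` and the 20% tolerance are illustrative assumptions.

```python
from typing import Callable


def with_fallback(
    ai_model: Callable[[float], float],
    proven_rule: Callable[[float], float],
    x: float,
    tolerance: float = 0.2,  # hypothetical maximum relative disagreement
) -> tuple[float, bool]:
    """Return (result, needs_human), using a proven rule as a validating input."""
    reference = proven_rule(x)
    try:
        prediction = ai_model(x)
    except Exception:
        # The AI failed outright: use the proven rule and escalate.
        return reference, True
    scale = max(abs(reference), 1e-9)  # avoid dividing by zero
    if abs(prediction - reference) / scale > tolerance:
        # The AI disagrees too much with the proven rule: do not trust it.
        return reference, True
    return prediction, False


# Hypothetical: an AI estimate of delivery hours checked against distance / average speed.
hours, needs_human = with_fallback(lambda km: km / 48.0, lambda km: km / 50.0, 400.0)
print(round(hours, 2), needs_human)  # prints 8.33 False
```

The tighter the tolerance, the more cases reach a human, which suits higher-risk applications.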

We are at the dawn of AI. While we gain real-world experience of applying AI to everyday processes, we must ensure proper risk management of those situations: the greater the risk, the greater the security measures included by design.

Accuracy

It is well known that the current iteration of Artificial Intelligence systems, based on Large Language Models (LLMs), is prone to “hallucinations”: constructing answers that are statistically probable but completely wrong.

Future Artificial Intelligence paradigms, perhaps closer to the promised Artificial General Intelligence (AGI), should be more precise, able to reason properly about conjectures, and equipped with a general world model that gives them the “common sense” today’s systems sorely lack.

In the meantime, we need to have in place methods and processes to validate the correctness of the answers received, and reduce the risk of acting on inaccurate information.

Reliability and Reproducibility

Results generated by Artificial Intelligence systems should be reliable and reproducible. Reliability means an AI system works properly under every circumstance it is required to handle, while Reproducibility means it exhibits the same behavior when run again under the same conditions.
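
In practice, reproducibility means recording everything that influences an output (model version, random seed, input) so a run can be repeated exactly. The toy generator below is a hypothetical stand-in for a real model; it derives its random generator from those recorded values, so identical conditions yield identical behavior. With real models running on parallel hardware, fixing a seed may not be enough on its own to guarantee bit-identical outputs.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class RunConfig:
    model_version: str  # exact model build used for the run
    seed: int           # random seed recorded alongside the output


def generate(prompt: str, config: RunConfig, vocabulary: list[str], length: int = 5) -> str:
    """Toy stochastic 'model': picks random words, seeded from the recorded conditions."""
    # Seeding random.Random with a string is deterministic across interpreter runs.
    rng = random.Random(f"{config.model_version}|{config.seed}|{prompt}")
    return " ".join(rng.choice(vocabulary) for _ in range(length))


def is_reproducible(prompt: str, config: RunConfig, vocabulary: list[str], runs: int = 3) -> bool:
    """Re-run under the same conditions and check that every output is identical."""
    outputs = {generate(prompt, config, vocabulary) for _ in range(runs)}
    return len(outputs) == 1


config = RunConfig(model_version="demo-1.0", seed=42)
print(is_reproducible("Summarize the report", config, ["risk", "data", "model", "user"]))
# prints True
```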

3. Privacy and Data Governance

Privacy is one of the Fundamental Rights recognized by the European Union Charter, and the advent of AI systems poses an undeniable risk to this right and to the “Principle of Prevention of Harm”. Improper Data Governance can pose the same risks.

Privacy and Data Protection

Artificial Intelligence systems are capable of sifting through enormous amounts of data, both during their training process and operation.

This data can come from many sources, and some of it might include personal or sensitive information which, once the model is created, may become accessible to anyone who asks for it.

Copyrighted works (texts, images, music, etc.) can also be processed by Artificial Intelligence systems as part of their training, enabling them to create similar works that, even without being direct derivatives, could be considered copyright infringement.

This is currently a matter of intense debate, and several incidents have already occurred5 because of poor management of personal and identifying data, and because of copyright infringement.

Quality and Integrity of Data

Data collected for AI training can contain biases, and those biases will become part of the system’s answers and results. Special care must be taken to prevent this, as it can be difficult to fix once the model is in operation.

Even worse situations can arise with AI systems capable of continuous learning, as they can acquire improper information, perhaps maliciously fed into them. As mentioned before, we already have examples of this (Microsoft’s Tay).

Access to Data

If an Artificial Intelligence system is going to be fed with confidential and sensitive information (like personal data), it must be designed, developed and deployed in a way that guarantees proper data access protocols are followed.

4. Transparency

The “Principle of Explicability” requires any AI system to be transparent about its data, algorithms, design, construction and any business model it follows.

Traceability

Given the current state of AI, and the way LLMs work, it is still essential to validate the answers they provide, or we risk acting on “hallucinations”.

Being able to trace how an answer was reached, through the algorithms and all the way back to the data sources, is required for Trustworthy AI.

This makes it possible to pinpoint errors, fix their causes and prevent them from recurring.

Explainability

Humans and AI systems will work together to produce results for the organizations employing them. As such, the concept of Explainability must cover both parts: AI and Human.

In some cases, technical Explainability is at odds with the capabilities or performance of the AI system (it introduces design complexities that can make the system slower, less powerful and harder to maintain). But it is necessary if we are going to trust its results.

On the other hand, it is also necessary to be transparent about which parts of an organization’s decision processes are affected by AI systems, and why using them has been deemed appropriate.

Communication

As humans, we have the right to know whether we are interacting with an AI system or with another human. Current AI systems might not be able to fully impersonate a human being, but that might change soon.

And even when knowingly interacting with an AI system, we have the right to know its limitations and capabilities, so we can better choose how and when to use the answers and knowledge it provides, minimizing risks.

5. Diversity, Non-Discrimination and Fairness

Inclusion and Diversity in AI systems shouldn’t be an afterthought; they must be present throughout the systems’ creation and operation. This will help uphold the “Principle of Fairness”.

Avoidance of Unfair Bias

AI systems can develop unfair biases from biased training data or biased algorithms. This has already happened, as described in this MIT Technology Review article6, which narrates how a police department had to deactivate its AI-powered predictive policing system because of racial bias.

The best way to prevent this is to have processes in place that remove unwanted biases from the data, along with diverse hiring processes, which make it harder for these biases to slip inadvertently into the final product.
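
Removing bias starts with measuring it. A minimal sketch, assuming we have the outcomes an AI system produced for people in different groups: compute the positive-outcome rate per group and the ratio between the lowest and highest rates. Flagging ratios below 0.8 follows the “four-fifths rule” heuristic from US employment selection guidelines, used here only as an example threshold; it is one of several fairness metrics and does not by itself prove or disprove unfair bias.

```python
from collections import defaultdict


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. 'approved') per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(outcomes: list[tuple[str, bool]]) -> float:
    """Lowest group selection rate divided by the highest one (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical loan decisions: group A approved 8 of 10, group B approved 5 of 10.
outcomes = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
ratio = disparate_impact_ratio(outcomes)
if ratio < 0.8:
    print(f"Possible unfair bias: ratio {ratio:.2f}")
```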

Accessibility and Universal Design

AI systems will be used by many diverse population groups, so Accessibility must be kept in mind throughout their whole lifecycle. Universal Design, as a concept and as guidance, helps achieve this goal.

Stakeholder Participation

Everyone involved in the AI system lifecycle should participate in its design in some way, to make sure it is built according to their needs and doesn’t pose a threat or risk.

In the same way, once it is in operation, feedback from those stakeholders will be key to improving it over time, adding functionality or fixing things that don’t work.

6. Societal and Environmental Wellbeing

AI systems can have both a positive and a negative impact on society, the environment and the general wellbeing of humankind, both present and future. It is important to ensure the “Principles of Fairness and Prevention of Harm” are considered throughout their lifecycle.

Sustainable and Environmentally Friendly AI

AI systems will enable us to perform analyses beyond our current capabilities, and this will help us tackle some of the hardest problems we face today, like our response to Climate Change.

But, at the same time, AI systems consume enormous amounts of processing power for both training and operation, and that can become a problem in itself.

Social Impact

AI systems are already permeating many aspects of our society: students and teachers using them to create content, lawyers using them to summarize documents, businesses using them to serve online customers, etc.

And this is only the beginning. We must try to predict the potential impact, so AI doesn’t erode vital elements of our society, like social skills, relationships, agency, etc.

Society and Democracy

AI systems can also be misused to manipulate populations, especially during electoral processes. It is important to monitor their usage to prevent this.

7. Accountability

The “Principle of Fairness” makes Accountability a necessity. If we are to have Trustworthy AI, we must provide accountability for every part of the lifecycle of the AI systems we develop.

Auditability

Auditability is key for Trustworthy AI, as it allows the assessment of an AI system’s data, processes, algorithms, etc. It is not necessary to openly expose sensitive information like business models, data models, weights, etc. Those can be assessed using proper access methodologies, and Observability techniques can be used for routine processes.
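
As an illustration of how auditability can coexist with confidentiality, here is a minimal sketch of a tamper-evident audit log: each entry stores the hash of the previous one, so an auditor can check that no recorded event was edited, reordered or deleted without needing access to model weights or business data. Truncating the newest entries is not detectable this way unless the latest hash is also stored externally. The `AuditLog` class is hypothetical; production systems would typically rely on dedicated logging or ledger infrastructure.

```python
import copy
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which every entry is chained to the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        event = copy.deepcopy(event)  # later changes by the caller must not alter the log
        self.entries.append({"event": event, "prev_hash": prev_hash,
                             "hash": _digest(event, prev_hash)})

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered or removed (non-final) entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"action": "predict", "model": "v1", "user": "anon-17"})
log.append({"action": "override", "operator": "op-3"})
print(log.verify())  # prints True
```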

Minimization and Reporting of Negative Impacts

AI systems and the organizations controlling them must not be able to prevent users, employees, stakeholders or anyone else who detects misbehavior in the AI from reporting it through the proper channels.

The focus must be on minimizing the potential damage caused by an AI malfunction, by enabling a fast and streamlined way to report it, so proper actions can be taken immediately.

Trade-offs

As mentioned before, there will be situations where tensions arise between these requirements. If a principle or requirement is given precedence over another, this must be done in a reasoned and well-documented way, because at some point it might be necessary to justify that decision.

Those trade-offs must be analyzed from the ethical and risk management perspectives and, if it proves impossible to resolve the situation in an ethically acceptable way, the development or deployment must be aborted.

Redress

An important part of an AI system’s trustworthiness is knowing in advance that adequate redress mechanisms exist in case a negative impact occurs.

Technical and Non-Technical Methods to Realise Trustworthy AI

Artificial Intelligence systems might not be new as a technology, but their introduction to many business domains is definitely a recent occurrence.

The way in which AI systems are developed is also constantly evolving, to adapt to new challenges found along the way, or make use of new advantages and capabilities of hardware, algorithms, etc.

And the AI systems themselves have also been evolving at breakneck speed, posing a challenge to organizations trying to decide which of the available options to adopt for their business purposes.

Both the development process and the AI systems follow a cyclical, iterative process that requires constant assessment to keep improving over time and avoid being left behind. At the same time, these assessments should aim to increase AI trustworthiness.

Trustworthy AI can be realised by both Technical and Non-Technical methods. We will briefly mention some of them; the list is by no means exhaustive.

Technical Methods

Technical methods to ensure Trustworthy AI must be ingrained in the whole AI system lifecycle: design, development, deployment and operation.

Architectures for Trustworthy AI

Trustworthy AI systems will have the requirements integrated directly into their design and architecture, making it impossible for them to enter undesired states or provide inappropriate answers.

AI systems capable of continuous, unsupervised learning risk acquiring, on their own, data and training that produce undesired results. To avoid this, they must be able to identify those rogue elements and states during their “Sense-Plan-Act” cycle and filter them out accordingly.

Ethics and Rule of Law by Design (X-by-Design)

In the same way that several “by-Design” methods have been adopted (like “Privacy-by-Design” or “Security-by-Design”), we need to adopt a Trustworthy-by-Design and an Ethics-and-Rule-of-Law-by-Design approach.

This approach will ensure compliance at every level of the AI system lifecycle.

Explanation Methods

If we are going to trust an AI system’s result, we need to be able to explain how it arrived at that answer. This need has fostered a new field of research: Explainable AI (XAI).

XAI applies various techniques during the design and construction of AI systems that make it possible to trace the flow of “reasoning” leading to a specific answer (for example, identifying which “neurons” activate in response to a given prompt).

This is very important for the current iteration of AI systems, based on LLMs trained on huge amounts of data, because a small variation in a neural network weight can produce very different answers, and this process needs to be properly mapped.

Testing and Validating

AI systems based on the current trend (LLMs) are, in practice, non-deterministic: the same input can produce different answers. How different those answers are depends on certain parameters, like “temperature” (the level of randomness).
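
To illustrate what “temperature” does, here is a minimal sketch of temperature-scaled softmax sampling, the usual way a model’s raw scores (logits) for candidate next tokens are turned into probabilities: each logit is divided by the temperature before normalizing, so low temperatures concentrate probability on the top-scoring token and high temperatures flatten the distribution. The tokens and logits are made-up values; real serving stacks add further controls such as top-k or top-p filtering.

```python
import math
import random


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities; temperature controls how peaked they are."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (greedy decoding uses argmax instead)")
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens: list[str], logits: list[float], temperature: float,
                 rng: random.Random) -> str:
    """Draw one token according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


tokens, logits = ["Paris", "Lyon", "Nice"], [4.0, 2.0, 1.0]
rng = random.Random()
for t in (0.2, 1.0, 2.0):
    draws = [sample_token(tokens, logits, t, rng) for _ in range(10)]
    print(t, draws)  # higher temperature: more varied answers for the same input
```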

This means that traditional deterministic software testing techniques are not enough. Tests and validations must happen not only during the design and construction phases, but throughout the whole operation phase, because changes in the context where the AI is used can lead to unexpected results that may need to be dealt with.
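
Because the same input can produce different outputs, one common approach is statistical acceptance testing: run the same prompt many times, check each output against a criterion, and require a minimum pass rate rather than a single exact answer. In the sketch below, `flaky_assistant` is a hypothetical stand-in for a non-deterministic AI system, and the 90% threshold is an arbitrary example; the same check can be scheduled to run periodically in production.

```python
import random
from typing import Callable


def pass_rate(system: Callable[[str], str], prompt: str,
              check: Callable[[str], bool], runs: int = 1000) -> float:
    """Fraction of runs whose output satisfies `check`."""
    return sum(check(system(prompt)) for _ in range(runs)) / runs


# Hypothetical stand-in for a non-deterministic AI system: right about 95% of the time.
_rng = random.Random(7)


def flaky_assistant(prompt: str) -> str:
    return "Paris" if _rng.random() < 0.95 else "Lyon"


rate = pass_rate(flaky_assistant, "What is the capital of France?", lambda out: out == "Paris")
assert rate >= 0.90, f"pass rate {rate:.1%} is below the 90% acceptance threshold"
```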

Quality of Service Indicators

Software systems are commonly developed to meet certain Quality of Service (QoS) indicators that measure performance, security, reliability, etc.

AI systems should have all of these, plus QoS indicators for the accuracy of the answers they provide, for all the trustworthiness parameters we have mentioned so far, and more.
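
A minimal sketch of a QoS report that puts an accuracy indicator next to a classic latency indicator, assuming each logged request has been judged correct or incorrect (against a reference answer or by a human reviewer). The 800 ms p95 latency target and the 90% accuracy target are hypothetical service-level objectives chosen for illustration.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Observation:
    latency_ms: float
    correct: bool  # judged against a reference answer or by a human reviewer


def qos_report(observations: list[Observation],
               max_p95_latency_ms: float = 800.0,  # hypothetical latency objective
               min_accuracy: float = 0.9) -> dict:  # hypothetical accuracy objective
    if len(observations) < 2:
        raise ValueError("need at least two observations to estimate percentiles")
    latencies = [o.latency_ms for o in observations]
    # 19 cut points for n=20; the last one is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=20, method="inclusive")[-1]
    accuracy = sum(o.correct for o in observations) / len(observations)
    return {
        "p95_latency_ms": p95,
        "accuracy": accuracy,
        "latency_ok": p95 <= max_p95_latency_ms,
        "accuracy_ok": accuracy >= min_accuracy,
    }


observations = [Observation(120.0, True)] * 18 + [Observation(950.0, False)] * 2
print(qos_report(observations))
```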

Non-Technical Methods

Non-Technical methods for realising and maintaining Trustworthy AI systems are also necessary throughout their lifecycle. The EU Expert Group on AI listed the following:

Regulation

Regulations that will allow AI to be trustworthy are starting to appear all around the world. But they will need to be revised constantly, as the AI landscape evolves (and it is evolving rapidly).

Codes of Conduct

Governments are not the only ones who can be held responsible for building and deploying Trustworthy AI: corporations can also bring trustworthiness concepts into their internal Codes of Conduct.

Standardisation

Many standards exist in different industries, and those help to manage expectations about quality, performance, features, etc.

Eventually, Trustworthy AI standards will emerge and be adopted by the different stakeholders, making it easier for everyone to create and use AI systems without having to navigate individual regional regulations.

Certification

Along with Standardization, we can expect Certification to become one of the tools to achieve widespread adoption of Trustworthy AI practices, during the whole AI systems lifecycle.

Accountability via Governance Frameworks

Certification and Standardization help prove to external stakeholders that the AI systems developed or deployed are compliant with Trustworthy AI best practices.

But organizations should also have an internal governance framework, with executives at the appropriate level responsible for ongoing compliance and, if necessary, board members dedicated to AI Ethics.

Staying in touch with oversight groups and public stakeholders who can provide guidance or external experience can also be useful.

Education and Awareness to Foster an Ethical Mindset

Trustworthy AI is the responsibility of all stakeholders: designers, developers, deployers, users, etc. This means everyone needs to understand the importance of AI Ethics and Trustworthiness, so they can fulfill their role in keeping AI systems compliant.

Stakeholder Participation and Social Dialogue

Learning about the perspectives of everyone involved in the use of AI will be a key factor in creating AI systems that benefit society as a whole.

This requires widespread stakeholder participation and dialogue, led either by governments or by interested parties, like the organizations developing or deploying AI tools.

Diversity and Inclusive Design Teams

As we mentioned before, it is very important to have diverse and inclusive design and implementation teams. Diversity in gender, culture, age, ability, professional background, etc. can help detect, avoid and correct biases that could appear during the AI system lifecycle, increasing its trustworthiness.

What comes next

Stay tuned, as I’ll be posting the fourth part, “Assessing Trustworthy AI”, in my next article!

Thank you for reading my publication. If you found it helpful, please share it with your friends and coworkers. I write weekly about Technology, Business and Customer Experience, which lately has me writing a lot about Artificial Intelligence too, because it is permeating everything. Don’t hesitate to subscribe for free to this publication to stay informed on this topic and everything related that I publish here.

As usual, please leave any comments and suggestions in the comments area. Let’s start a nice discussion!

When you are ready, here is how I can help:

“Ready yourself for the Future” - Check my FREE instructional video (if you haven’t already)

If you think Artificial Intelligence, Cryptocurrencies, Robotics, etc. will cause businesses to go belly up in the next few years… you are right.

Read my FREE guide “7 New Technologies that can wreck your Business like Netflix wrecked Blockbuster” and learn which technologies you must be prepared to adopt, or risk joining Blockbuster, Kodak and the horse carriage into the Ancient History books.

References

1. Ethics guidelines for trustworthy AI: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

2. 'Killer robots' pose a moral dilemma: https://www.dw.com/en/killer-robots-autonomous-weapons-pose-moral-dilemma/a-41342616

3. Twitter taught Microsoft’s AI chatbot to be a racist asshole in less than a day: https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

4. Can this makeup fool facial recognition?

5. Google was accidentally leaking its Bard AI chats into public search results

6. Predictive policing algorithms are racist. They need to be dismantled.