Trustworthy Artificial Intelligence (Part 1)

Can Generative Artificial Intelligence be Trustworthy?

This is the first part of a four-part series. You can find the others here:

Would you put your life in the hands of a decision made by a ChatGPT-like Artificial Intelligence? A medical decision? A legal one? Can AI, as we have it today, be trustworthy? What does it even mean to be trustworthy?

You might be surprised to learn that we already entrust life-and-death decisions to software systems all the time. But those are carefully selected situations, where the systems were exhaustively engineered to deliver better results than the humans they replaced.

Think of the control systems in our latest aircraft, where several computers and humans “vote” in a fraction of a second on a maneuver being attempted. The human input determines the intent, but the machines decide how to carry it out… or whether it gets done at all. The aircraft sensors, connected to the computers, perceive the surroundings faster than humans can, and the computers can make a life-and-death decision to avoid deadly mistakes.

But fatalities caused by aircraft computers misjudging certain situations have already happened (like Lion Air Flight 610 and others[1]). This, of course, sparked public outcry and the grounding of the affected aircraft models. Investigations were launched immediately to find the root causes and fix them. If those planes were ever to fly again, investigators had to explain how the computers arrived at their fatal decisions.

At the same time, many such software systems have been working reliably for decades, tirelessly, day and night, controlling nuclear reactors, ICU medical equipment and, more recently, some cars, among many other things. They simply do it better than we do.

So, why is Artificial Intelligence any different? This onion, like ogres, has many layers. Let’s peel them one by one.

Current Artificial Intelligence is not THE Artificial Intelligence

Contrary to what most people think, the type of “artificial intelligence” we have right now does not, by itself, lead to the Artificial Intelligence that sci-fi has planted in everyone’s minds: a computer system capable of “thinking like a human”, one we can have intelligent conversations with, and one that can be considered our intellectual equal, to the point of even endangering mankind.

I’ve written extensively about this in my previous post “Artificial Intelligence for Customer Experience”[2], but I’ll give a short summary here anyway.

Computer Scientists have established two categories to classify Artificial Intelligence systems:

Narrow AI (also known as Weak AI)

General AI (also known as Strong AI)

Narrow AI has been with us for some time already: Computer Vision systems, like the ones in Tesla vehicles, capable of navigating crowded streets; Natural Language Processing systems, like the translation services we have all been using for years; Medical Image Processing, now capable of detecting some medical conditions beyond what even the expert human eye can spot on its own. And many more.

Narrow AI, by definition, does things that we humans do every day, but faster and more efficiently, and sometimes it can do things beyond our capabilities. But only that specific thing. It doesn’t “think”. It can neither generalize nor make conjectures (as Noam Chomsky brilliantly stated in his New York Times article “The False Promise of ChatGPT”[3]).

On the other hand, General AI (which doesn’t exist yet) is expected to show cognitive capabilities similar to those of humans, at least in the results it achieves. As Edsger W. Dijkstra, one of the pioneers of computer science, eloquently put it: “The question of whether machines can think is about as relevant as the question of whether submarines can swim”. In other words, it is very unlikely that the way machines achieve intelligence will look like the way we achieve our own (which is itself a question we have yet to answer).

So, why all the ruckus about ChatGPT and the downfall of human society because of it? Well, it is mostly caused by poorly informed tech evangelists, marketing people without a technology background and unscrupulous media, all of them trying to profit from the hype, of course.

ChatGPT, Bard and similar chatbots belong to a category of Machine Learning systems called Large Language Models, built on a neural network architecture called the Transformer; OpenAI’s models are Generative Pre-trained Transformers (hence the “GPT”). The simplest way to understand how this works is to think of it as a more complex version of the predictive text completion your cellphone has had for a couple of decades already. It is trained on a huge amount of text, and it tries to predict, using statistics, the most probable answer to the question (or “prompt”, as we now call it) you gave it, one word (more precisely, one “token”) at a time.
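To make the predictive-text analogy concrete, here is a toy next-word predictor. It is a minimal sketch, assuming the simplest possible statistical model: counting which word follows which in a tiny training text (a “bigram” model). Real GPT models use huge neural networks, sub-word tokens and far more context, so this only illustrates the statistical idea, not how ChatGPT is actually implemented. The function names and the corpus are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if `word` is unknown."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def complete(follows: dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Greedily extend the prompt, one most-probable word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(predict_next(model, "the"))               # → cat
print(complete(model, "the dog", max_words=4))  # → the dog sat on the cat
```

Notice the last line: “the dog sat on the cat” never appears in the training text. Every step is statistically plausible, yet the result is something the data never said.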

So, yeah, you can keep feeding it curated information (as OpenAI, Google and others are doing right now), or open it to the Internet so it can gobble data at will. But no, you won’t wake up one morning to find it greeting you with a “Good morning, Dave” before dumping you into the cold of space. It doesn’t lead to that. It will not turn into a General AI just by expanding its model.

It can be said that ChatGPT and the rest of the GPTs are just another iteration of Narrow AI. To achieve real General AI we still need many breakthroughs: algorithmic, computational, and more. But that doesn’t mean we cannot reap the benefits that Narrow AI, like the generative models we have right now, can provide. And while we are at it, we can also prepare our society for when General AI arrives, and ideally learn how to craft it so it follows a human-centric approach.

Critical Software Systems are carefully engineered. Your ChatGPT bot isn’t

So, we are already using Narrow AI in many places, sometimes critical ones. And we have had software running life-and-death operations for us even without the AI tag on it (search for “Real-time Systems” and you’ll find tons of examples). What makes ChatGPT and its siblings different?

Real-time systems and Narrow AI-enhanced systems have been carefully and purposely designed to apply those techniques in situations where they can outperform humans. And thoroughly tested. Again and again. And we have still had some serious incidents, some of them deadly (like the aircraft control one mentioned earlier).

ChatGPT created a level of hype and awareness unheard of in the consumer AI field. Suddenly everyone was toying with AI, with emphasis on the word “toy”. It wasn’t long before lawyers were drafting legal arguments with ChatGPT, students were trying to make it write their essays, and so on.

But there is a problem. A big one. Again, Narrow AI doesn’t think. Some people might get the impression it does because of how articulate its responses are, but as I already said, it is just a glorified predictive text engine (and yes, AI image generators, although they use different techniques, are statistical machines too).

Narrow AI doesn’t “understand the world”. So if it is fed information saying that pigs fly, it is going to write a very nice essay about how pigs can fly. Perhaps even a sonnet. You can fool it very easily. But the worst part is that it can fool itself even more easily.

We call that “hallucinations”. They happen when a GPT-based AI reaches the limits of its knowledge, where a careful response would require the human ability to understand context. But it doesn’t have that ability. Somewhere, someone fed it a document claiming that pigs fly, describing pig aerodynamics, speeds at sea level, etc. And when prompted to write an essay about flying animals, it will include the pigs, with all the detailed data, and even citations. Scary, huh?

But it is even worse. It doesn’t need a malicious agent feeding it false information about pigs’ flight capabilities. It can write nonsense all by itself, even when fed proper data. Why? Because it is a statistical machine. It is not “composing” an answer. It is just putting together letters… then words… then paragraphs that are statistically likely to form an answer, based on the tons of information it has been fed. And if for some reason the result ends up being nonsense, its inability to understand that up is up, and why (and when up might not be up), leads it to happily spout a ludicrous answer without hesitation.
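The “statistically probable” part can be sketched too. Chatbots typically don’t always pick the single most likely next word: they sample from the probability distribution the model produces (exact decoding settings vary by product). The probabilities below are invented for illustration, and `sample_next_word` is a hypothetical helper, not any real chatbot’s code.

```python
from __future__ import annotations

import random

# Invented next-word probabilities a model might assign after the prompt "Pigs can".
# Even with sensible training data, some probability mass ends up on nonsense.
next_word_probs = {
    "swim": 0.55,
    "run": 0.35,
    "fly": 0.10,
}


def sample_next_word(probs: dict[str, float], rng: random.Random) -> str:
    """Pick one word at random, weighted by the model's probabilities."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed so the run is reproducible
samples = [sample_next_word(next_word_probs, rng) for _ in range(1000)]
for word in next_word_probs:
    print(word, samples.count(word))
```

Roughly one run in ten continues with “fly”, and nothing in the sampling step checks whether the sentence makes sense: the improbable answer comes out just as fluently as the sensible ones.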

So now, instead of carefully designed and tested AI systems that outperform humans in very controlled scenarios, we have millions of people toying with AI in an uncontrolled way, in some cases irresponsibly and for professional purposes. Our lack of understanding of its real capabilities is putting us in danger.

Perhaps you have already heard about the lawyers who presented a ChatGPT-generated argument that included hallucinated citations of court cases that didn’t exist, an episode that prompted a Texas judge to ban filings created solely by AI[4].

As usual, it is not the technology per se that causes the damage, but its misuse. And as Murphy’s Law states: “Anything that can go wrong will go wrong”. So it went. And so it will keep going, unless we, as a society, manage to place proper guardrails on how AI works and how it is used.

Neural Network-based AI is hard (or even impossible) to explain

Are hallucinations and the human misuse of AI impossible to solve? In theory, no. A lot of research is being done on how to explain the answers given by Generative AI, for many reasons.

To begin with, it is important to understand how it reaches an answer, so if the answer is wrong, it is possible to find a way to fix either the neural network itself, the data, the underlying algorithms, etc.

Also, it is important to be able to explain the answer for accountability purposes: if we are going to use AI-generated content as a basis for our own work, we need to be able to explain how it reached its conclusion if we are asked about it later (not to “blame it”, because we are still responsible for whatever we produce with it, of course).

But let’s address first the elephant in the room. Why is it hard (or impossible) to explain a Neural Network based AI (like the GPT ones)?

First we need to explain a bit about how Neural Networks work, so you can get an idea of why (don’t worry, I’ll try to make it as simple as possible).

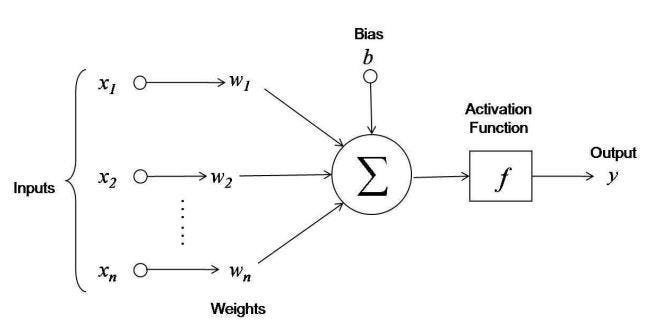

This is a “neuron” from an Artificial Neural Network (yes, it looks a lot like a biological neuron, because it is inspired by its structure and behavior).

And this is a biological neuron. As you can see, there is quite a resemblance. And it is not by chance. Just as with biological neurons, the artificial one receives inputs from the neurons on the left (schematically, of course).

The Artificial Neuron takes each of its inputs (there can be hundreds, thousands or more), multiplies each one by its corresponding “weight” (marked with a “w” on each input), sums the results, and adds a value called the “bias” (no need to go into detail about this).

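Then it applies something called the “Activation Function”, which transforms that sum (for example, by squashing it into a range between 0 and 1) before the result is sent towards the right: to the output, or into the next Artificial Neuron. This non-linear step is what lets networks of many neurons model complex relationships.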
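In symbols, the neuron computes output = f(w1·x1 + w2·x2 + … + wn·xn + b), where f is the activation function. Here is a minimal sketch in Python, assuming the sigmoid as the activation function (one classic choice among several; many modern networks use others, such as ReLU). The input values, weights and bias are made up for illustration.

```python
from __future__ import annotations

import math


def sigmoid(z: float) -> float:
    """Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of the inputs, plus the bias, through the activation."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(weighted_sum + bias)


# 1.0*0.4 + 0.5*0.8 + (-1.0)*0.2 = 0.6, plus a bias of -0.2 gives 0.4
output = neuron(inputs=[1.0, 0.5, -1.0], weights=[0.4, 0.8, 0.2], bias=-0.2)
print(round(output, 4))  # → 0.5987
```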

Yes. That’s it. This simple concept is what is behind all of this Generative AI “miracle”.

But, you are probably asking yourself: OK, Alfredo, don’t fool me. Is this thing capable of learning, writing essays, and getting people into trouble?

Well… yes. Let’s explain how it learns.

When you train an Artificial Neuron, you already know the answer you are looking for (this is called supervised learning). Of course you do. You cannot train someone (or something) if you don’t know the answers beforehand, right?

So your Artificial Neuron starts with a specific Activation Function and a fixed bias (in practice the bias is usually adjusted during training as well, but for our purposes let’s keep it fixed). It also starts with an initial set of weights (the w’s tied to the inputs), often chosen at random.

You feed a set of inputs from left to right and, once the neuron’s calculation is done (inputs multiplied by weights, summed up, plus the bias, and then the activation function applied), you get the output.

Then you compare the output to the “desired output” (the answer you already know). If it isn’t the answer you expect, you adjust the weights (the w’s) slightly, in the direction that reduces the error, and try again. Again and again, over many examples, until the outputs are close enough to the ones you want.

Now you know which set of weights produces the required answer. That neuron is “trained”.
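Here is a minimal sketch of that loop for a single neuron learning the logical OR function (output 1 if either input is 1). It assumes the sigmoid activation and one common way of “changing the weights”: gradient descent on the log-loss, the same update rule used in logistic regression. As in the simplified description, the bias stays fixed; the learning rate, starting weights and number of rounds are arbitrary choices for illustration.

```python
from __future__ import annotations

import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(x: list[float], weights: list[float], bias: float) -> float:
    """Forward pass of a single neuron."""
    return sigmoid(sum(xi * wi for xi, wi in zip(x, weights)) + bias)


# Training data for logical OR: each input paired with the answer we already know.
examples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

weights = [0.0, 0.0]  # starting weights (real networks usually start from random values)
bias = -1.0           # kept fixed, as in the simplified description
learning_rate = 0.5

for _ in range(1000):  # repeat over the whole data set, again and again
    for x, target in examples:
        error = predict(x, weights, bias) - target  # how far off is the output?
        # Move each weight against the error, in proportion to its input.
        weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]

for x, target in examples:
    print(x, "desired:", target, "got:", round(predict(x, weights, bias), 3))
```

After training, the three “1” cases come out close to 1, while the (0, 0) case stays below 0.5. With the bias fixed at -1 that case can never drop below sigmoid(-1), about 0.27, which is one reason real training adjusts the bias too.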

But you don’t really expect this lone artificial neuron to be able to produce that fancy AI-generated image you saw on X.com (formerly Twitter).

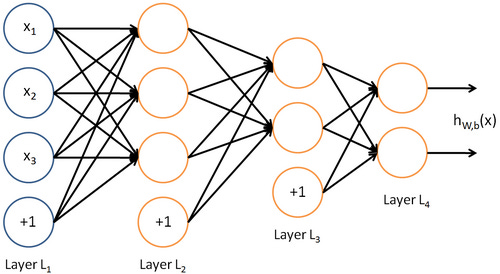

You are right. This is more like the real thing. Neurons are not alone. As in our nervous system, there are A LOT of them. An AI Neural Network can have thousands, millions or even more.

And each neuron is connected to the neurons in the next “layer”. Each line in that diagram is a weight (in LLM jargon, the weights, together with the biases, are called “parameters”). The more layers, the “deeper” the neural network. Now you know why some of these techniques are called “Deep Learning” (because of their “deep neural networks”).
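Counting parameters is simple arithmetic. A minimal sketch, assuming fully connected layers like the ones in the diagram (every neuron linked to every neuron in the next layer), with one weight per connection and one bias per receiving neuron. The layer sizes are hypothetical; Transformer models like GPT are organized differently, but their parameter counts add up weights and biases in the same spirit.

```python
from __future__ import annotations


def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus biases in a fully connected network with the given layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # one line in the diagram per pair of connected neurons
        biases = n_out          # one bias per neuron in the receiving layer
        total += weights + biases
    return total


print(count_parameters([3, 4, 4, 2]))        # → 46
print(count_parameters([1000, 1000, 1000]))  # → 2002000
```

Three layers of a thousand neurons each already mean about two million parameters. Because the number of weights between two layers is the product of their widths, parameter counts climb very quickly as networks grow.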

You have probably heard that the latest ChatGPT, which uses the GPT-4 model, reportedly has around 1.7 trillion parameters (OpenAI has not officially disclosed the figure). Yep. You read that right. 1.7 trillion weights and biases (those lines in the diagram). And every one of them needs to be trained using the process described earlier.

Now you know why it takes so long to train a Neural Network, and why it uses so much computing power (expensive, expensive, expensive), so only behemoths like Microsoft (through OpenAI), Google, Facebook, etc. can train really large models (you have probably also heard the term LLM, which stands for Large Language Model).
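A back-of-the-envelope sketch of why “expensive” keeps coming up, looking only at storing the weights. It assumes the reported (unconfirmed) figure of 1.7 trillion parameters, common numeric formats, and a hypothetical accelerator with 80 GB of memory. It ignores everything else training needs (training data, intermediate results, optimizer state), which adds a lot more.

```python
import math

REPORTED_PARAMETERS = 1.7e12     # reported, unconfirmed GPT-4 figure
ACCELERATOR_MEMORY_BYTES = 80e9  # assumption: one accelerator with 80 GB of memory

BYTES_PER_PARAMETER = {"32-bit float": 4, "16-bit float": 2, "8-bit integer": 1}


def storage_terabytes(n_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in terabytes (10^12 bytes)."""
    return n_params * bytes_per_param / 1e12


def min_accelerators(n_params: float, bytes_per_param: int, memory_bytes: float) -> int:
    """Smallest number of devices whose combined memory fits the weights alone."""
    return math.ceil(n_params * bytes_per_param / memory_bytes)


for fmt, nbytes in BYTES_PER_PARAMETER.items():
    tb = storage_terabytes(REPORTED_PARAMETERS, nbytes)
    devices = min_accelerators(REPORTED_PARAMETERS, nbytes, ACCELERATOR_MEMORY_BYTES)
    print(f"{fmt}: {tb:.1f} TB of weights, at least {devices} accelerators")
```

Even in 16-bit precision, the weights alone take about 3.4 TB, which means more than 40 such devices just to hold the model in memory, before a single training step is computed.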

So, how do we explain that funky answer my AI neural network produced in response to my last prompt? To be 100% precise, we would have to backtrack from the answer through each of the parameters to the neurons that participated in building it, until we had a complete map of the neurons that fired, in sequence, to produce it.

As you can probably guess, with 1.7 trillion parameters this is nearly impossible. And that assumes no new “learning” happens between the answer and the explanation attempt. Many techniques exist to work around this, such as approximating the large model, around one specific answer, with a much smaller and simpler one (a “local model”), among many others.
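The “local model” idea can be sketched in a few lines. Instead of tracing every parameter, we treat the network as a black box: we query it with many slightly perturbed copies of one input, weight those samples by how close they stay to the original, and fit a simple linear model that mimics the black box only in that neighbourhood. Its coefficients then read as “how much each input mattered here”. This is the core idea behind LIME (Local Interpretable Model-agnostic Explanations); the code below is a simplified, hypothetical sketch, not the LIME library’s API, and `black_box` is an invented stand-in for a real network.

```python
from __future__ import annotations

import math
import random


def black_box(x: list[float]) -> float:
    """Invented stand-in for an opaque model: we can query it, not read its parameters."""
    z = 2.0 * x[0] - 3.0 * x[1] + 0.5 * x[2] ** 2
    return 1.0 / (1.0 + math.exp(-z))


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve the linear system a·c = b (Gaussian elimination with partial pivoting)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    c = [0.0] * n
    for i in range(n - 1, -1, -1):
        c[i] = (m[i][n] - sum(m[i][k] * c[k] for k in range(i + 1, n))) / m[i][i]
    return c


def local_surrogate(model, x: list[float], n_samples: int = 2000,
                    scale: float = 0.1, seed: int = 0) -> list[float]:
    """Fit a proximity-weighted linear model to `model` around the input `x`.

    Returns one coefficient per input feature: the local effect of that feature.
    """
    rng = random.Random(seed)
    d = len(x)
    # Weighted least squares via the normal equations (A^T W A) c = A^T W y,
    # where each row of A is [1, dx_1, ..., dx_d].
    ata = [[0.0] * (d + 1) for _ in range(d + 1)]
    aty = [0.0] * (d + 1)
    for _ in range(n_samples):
        dx = [rng.gauss(0.0, scale) for _ in range(d)]  # small random nudge
        y = model([xi + di for xi, di in zip(x, dx)])   # ask the black box
        weight = math.exp(-sum(di * di for di in dx) / (2 * scale ** 2))  # closer = more say
        row = [1.0] + dx
        for i in range(d + 1):
            aty[i] += weight * row[i] * y
            for j in range(d + 1):
                ata[i][j] += weight * row[i] * row[j]
    return solve(ata, aty)[1:]  # drop the intercept, keep the per-feature effects


point = [0.2, 0.1, 1.0]
print([round(c, 3) for c in local_surrogate(black_box, point)])
```

For this toy function the coefficients come out close to its true local sensitivities (about 0.46, -0.69 and 0.23), so the surrogate “explains” the answer at that point. But it only holds near that input: the further you stray, the less faithful the simple model becomes, which is the feasibility versus trustworthiness trade-off in miniature.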

But, without going into complicated details, you can probably see by now that we are talking about storing huge amounts of data to support later explanation attempts (expensive), processing overhead to calculate and store that data for when it is needed (expensive, expensive), and a lot of extra complexity in case we need to retrain or expand our model (expensive x 1000).

And all of this assumes that the explanation model is reliable enough for the type of neural network we are trying to explain, which might not be true. When you rely on reductions and simplifications, there is a trade-off between the feasibility and the trustworthiness of the explanation.

So, explaining a Neural Network-based AI (like a GPT) isn’t trivial, isn’t easy and isn’t cheap. It might not even be possible. That’s why Neural Networks, once trained, have long been considered “black boxes”.

A Trustworthy Generative Artificial Intelligence

The question that immediately arises is: how do we define a Trustworthy Generative Artificial Intelligence, so we can make sure we are building it that way? An Artificial Intelligence we can trust with our wellbeing and our lives, as we have done before with other software systems.

I’ll try to answer those questions by aligning with the European Union’s concept of “Trustworthy AI”. The EU is probably the current leader in the effort to create regulations that ensure AI is developed along an ethical path, centered on the human being as its beneficiary.

But this topic is just too big to cover in a single post, so I’ll split it into three parts, following the structure of the EU High-Level Expert Group on Artificial Intelligence’s report[5]:

Foundations of Trustworthy AI

Realisation of Trustworthy AI

Assessment of Trustworthy AI

Stay tuned, as I’ll be posting the second part, “Foundations of Trustworthy AI”, in my next article!

Thank you for reading my publication, and if you find it helpful, please share it with your friends and coworkers. I write weekly about Technology, Business and Customer Experience, which lately has led me to write a lot about Artificial Intelligence as well, because it is permeating everything. Don’t hesitate to subscribe for free to this publication, so you can stay informed on this topic and everything related that I publish here.

As usual, please leave any comments and suggestions you may have in the comments area. Let’s start a nice discussion!

Cheers.

When you are ready, here is how I can help:

“Ready yourself for the Future” - Check my FREE instructional video (if you haven’t already)

If you think Artificial Intelligence, Cryptocurrencies, Robotics, etc. will cause businesses to go belly up in the next few years… you are right.

Read my FREE guide “7 New Technologies that can wreck your Business like Netflix wrecked Blockbuster” and learn which technologies you must be prepared to adopt, or risk joining Blockbuster, Kodak and the horse carriage in the history books.

References

[1] Deadly Boeing crashes raise questions about airplane automation. https://www.theverge.com/2019/3/15/18267365/boeing-737-max-8-crash-autopilot-automation

[2] Artificial Intelligence for Customer Experience. https://alfredozorrilla.substack.com/p/artificial-intelligence-for-customer-experience

[3] The False Promise of ChatGPT. https://www.nytimes.com/2023/03/08/opinion/noam-chomsky-chatgpt-%20ai.html

[4] Texas judge bans filings solely created by AI after ChatGPT made up cases.

[5] Ethics guidelines for trustworthy AI. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai