It’s 10 am.

The server farm has been working at 100% all night, processing the latest changes to the AI core’s programming, changes suggested by the AI core itself.

You sip your… 8th? 10th? cup of coffee. Who’s counting anymore?

If this experiment works, it will be the last time we humans need to perform upgrades on the AI core directly.

Not that we are doing the programming anymore. For a few years now, the algorithms and data processing have been too complex for any human to handle alone.

We “prompt” Narrow AI systems to prepare the updates, guided by our own design ideas and creativity, while harnessing the raw power of the AI models we have so painstakingly trained.

But this time… this time is different. The AI core proposed the new design by itself.

If it proves successful, and we plug the AI core into itself, it will be able to perform its own upgrades.

No humans required. No sleep. No coffee.

Who can really predict how many… weeks? days? hours? it will take to upgrade itself again. And again. And again.

One wonders what it will mean to become the second most intelligent species on this planet.

We’ll find out.

In today’s issue:

The Singularity is Near: What is the Singularity, and why should we care?

Achieving and Surpassing the Computational Capacity of the Human Brain: The road ahead.

The Impacts and Existential Risks of the Singularity: Should we be worried or excited?

And more…

This week’s useful finds:

Inside Large Language Models: How AI Really Understands Language: An excellent explanation of how your favorite Generative AI tool (ChatGPT, Grok, etc.) works.

The publishing industry is broken, but it doesn’t have to break us: A really insightful discussion on strategies for business growth. It is mainly focused on the publishing industry, but applies to every Small Business model I can think of.

10 ways Blockchain can help Small Businesses: An interesting article on the use of Blockchain technologies for Small Business growth, written by DHL, a world leader in Logistics.

The Singularity

You might have already heard the term Technological Singularity. Or just Singularity.

Or perhaps you haven’t. So we will revisit it here.

What is it? Where does it come from? Is it possible? Are we far from it? Near?

According to Wikipedia1, the Technological Singularity is the hypothetical moment when our technology advances so fast, and so autonomously, that its growth becomes uncontrollable and irreversible, with unforeseeable consequences.

The term was first used in this sense by John von Neumann, in a conversation recounted by Stanislaw Ulam in 1958. Von Neumann was one of the fathers of the digital computer, and the von Neumann architecture, still the basis of most processors in the world today, bears his name.

But the person most responsible for the term’s wide circulation is perhaps Ray Kurzweil, author of “The Singularity is Near2”.

“What, then, is the Singularity? It's a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed. Although neither utopian nor dystopian, this epoch will transform the concepts that we rely on to give meaning to our lives, from our business models to the cycle of human life, including death itself. Understanding the Singularity will alter our perspective on the significance of our past and the ramifications for our future. To truly understand it inherently changes one's view of life in general and one's own particular life.”

— Ray Kurzweil

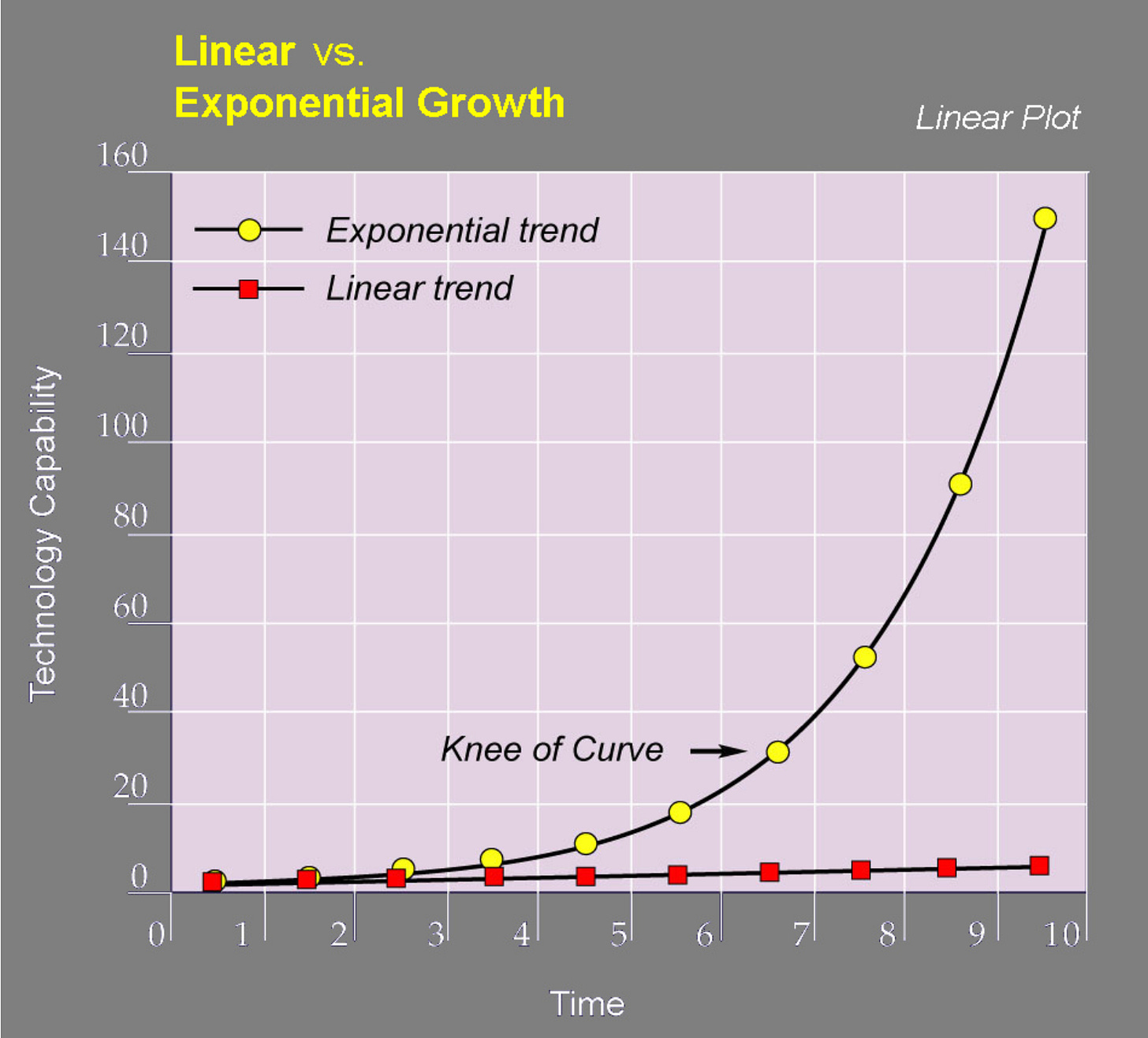

Linear vs Exponential Development

If I tell you that by 2045 we might have already reached the Singularity, with an Artificial Intelligence more capable than Human Intelligence, you’ll probably say it is too soon.

But, if Kurzweil’s projections hold, there is a good chance we will achieve it by then.

It is hard for us to grasp how close we are because the human mind thinks linearly.

We perceive time linearly, and we tend to use the past as a predictor for future events.

And it works pretty well when the events happen in a linear progression.

But technology advances exponentially.

So, if we use the same linear mindset to try to predict its future, we will fall short.

Greatly.

As an example, look at the previous figure, where a linear and an exponential curve are plotted side by side.

Notice how they move almost together at the beginning, but at some point the exponential curve pulls away at an ever-accelerating pace.
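To make the figure’s point concrete, here is a minimal Python sketch. It assumes, purely for illustration, a linear process that gains 1 unit per period and an exponential process that doubles every period, both starting from the same level. The function names and parameters are hypothetical, not taken from any source.

```python
def linear(t: int, step: float = 1.0) -> float:
    """Linear progress: the same fixed gain is added every period."""
    return 1.0 + step * t


def exponential(t: int, factor: float = 2.0) -> float:
    """Exponential progress: every period multiplies the previous level."""
    return factor ** t


# Print both curves side by side for the first ten periods.
for t in range(11):
    print(f"period {t:2d}: linear = {linear(t):5.0f}   exponential = {exponential(t):5.0f}")
```

Both curves start at 1, are still equal after the first period (2 and 2) and nearly equal after the second (3 and 4), but by period 10 the linear one has only reached 11 while the exponential one has reached 1,024.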

Just think what we have achieved during the last 10 years in telecommunications, health, electronics, materials, etc.

Then think how it took the previous 20 years to achieve a similar level of advancement.

The pace is accelerating, and soon we will see advancements in 5 years that previously might have taken centuries.
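The idea that a few years could soon hold centuries of progress follows from the rate of progress itself growing exponentially. The sketch below assumes, purely for illustration, that the rate of progress doubles every 10 years (a hypothetical value), so the rate at time s is 2^(s/10), with today’s rate equal to 1. Integrating that rate over a 5-year window gives the progress made in that window, measured in “years of progress at today’s rate”. The function name is hypothetical.

```python
import math


def equivalent_years(start: float, window: float, doubling_period: float) -> float:
    """Progress made between `start` and `start + window` (years from now),
    measured in years of progress at today's rate.

    Assumes the rate of progress is 2 ** (s / doubling_period) at time s,
    so today's rate is 1. The progress is the integral of that rate over
    the window: (D / ln 2) * (2 ** (end / D) - 2 ** (start / D)).
    """
    end = start + window
    scale = doubling_period / math.log(2)
    return scale * (2 ** (end / doubling_period) - 2 ** (start / doubling_period))


# Hypothetical assumption: the rate of progress doubles every 10 years.
for start in (0, 20, 40, 60):
    years = equivalent_years(start, window=5, doubling_period=10)
    print(f"5 years starting {start} years from now = about {years:.0f} years at today's rate")
```

Under this assumption, the next 5 years hold about 6 years of progress at today’s rate, while a 5-year window starting 60 years from now would hold roughly 380 years, i.e. centuries. Each extra decade of delay doubles the figure, which is exactly what a fixed doubling period means.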

That’s the power of Exponential Development, and we are right in the middle of it.

The Six Epochs

The pace of development is getting faster every day, but to get where we are now we had to go through a lengthy and slow process.

Kurzweil proposes six epochs for understanding where we come from and where we are going:

Epoch One: Physics and Chemistry

Starting with the Big Bang and the beginning of the Universe, this is the lengthiest Epoch, during which matter, atoms and molecules formed into what we have now.

Epoch Two: Biology and DNA

Then, at some point, life appears.

Probably one of the most amazing developments was the appearance of DNA, which can store enormous amounts of information, enough to describe and build life itself.

Epoch Three: Brains

Eventually, living beings began evolving central nervous systems and brains.

This gave rise to Intelligence and, eventually, Human Intelligence.

Epoch Four: Technology

We started creating a culture around us, and with it, Human Technology.

From fire, the wheel, and agriculture, all the way to our latest developments in Artificial Intelligence and Nanotechnology.

This period has been the shortest so far, but the next ones promise to be even shorter.

Epoch Five: The Merger of Human Technology with Human Intelligence

We are already on the rollercoaster towards the unification of our technology with our intelligence.

Every day we depend more and more on the tools we build: food from farms, vehicles, energy production, computers, telecommunications and more.

We already know the concept of “wearable technology”.

Our smartphones make us one with the Internet, even if rather inefficiently (by typing, talking, reading and watching).

Soon we will start interfacing directly with our technology, so the relationship becomes more efficient.

We can already see early results of these efforts in companies like Elon Musk’s Neuralink3.

But our truly defining moment will be when we finally achieve the old dream of building an Artificial General Intelligence.

Right now we can already consider ourselves a Human/Technology civilization.

Under no circumstances could we have achieved what we have now without previously developed tools and technology.

And we continue to do so.

But once we have Artificial Intelligence comparable (or superior) to Human Intelligence, we will start collaborating with our technology, not just using it.

And Artificial Intelligence will start developing its own tools, and developing itself, far beyond human biological limitations.

The Singularity.

Epoch Six: The Universe Wakes Up

This will mark the true expansion of Humanity into the cosmos.

Humanity and its technology will become one, and our resources and energy requirements will skyrocket.

Following Kardashev’s scale4, we will first need to harness all of the energy that reaches the Earth from the Sun, then all the energy produced by the Sun itself, and finally all the energy produced by our galaxy.
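Kardashev’s scale is often given a continuous form using an interpolation formula later proposed by Carl Sagan: K = (log10 P − 6) / 10, where P is the power a civilization uses, in watts. In Sagan’s rounded version, a Type I civilization commands about 10^16 W, Type II about 10^26 W, and Type III about 10^36 W. The sketch below simply evaluates that formula; the thresholds are Sagan’s approximations, and the figure used for humanity is a rough order-of-magnitude assumption, not a measured value.

```python
import math


def kardashev_rating(power_watts: float) -> float:
    """Sagan's continuous version of the Kardashev scale.

    K = (log10(P) - 6) / 10, with P the power used, in watts.
    """
    if power_watts <= 0:
        raise ValueError("power must be positive")
    return (math.log10(power_watts) - 6) / 10


# Sagan's rounded thresholds for each civilization type, in watts.
thresholds = {
    "Type I (planetary)": 1e16,
    "Type II (stellar)": 1e26,
    "Type III (galactic)": 1e36,
}
for name, power in thresholds.items():
    print(f"{name}: K = {kardashev_rating(power):.1f}")

# Assumed, order-of-magnitude figure for humanity's current power use (~2e13 W).
print(f"Humanity today: K = {kardashev_rating(2e13):.2f}")
```

Because the scale is logarithmic, each full step from one type to the next means using ten billion times more power, which is why the climb from Type I to Type III is so steep.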

Achieving and Surpassing the Computational Capacity of the Human Brain

But to reach the Singularity, we still need to solve several problems and develop new technologies and paradigms.

One of them is dealing with the computational capacity to support an Artificial General Intelligence.

Human brains and current computers work in very different ways, and have very different advantages and disadvantages.

Our brains are a huge neural network capable of incredible pattern matching and recognition, but they are slow at some kinds of computation and limited in memory.

Current computers are extremely fast, but they largely process instructions sequentially, and we are still learning how to build proper neural networks with them.

Nevertheless, computers can communicate with each other much faster than our brains can, retain information indefinitely, and once one of them learns how to do something, that knowledge can be transferred immediately to others.

So we need to develop computing techniques capable of harnessing the best of both worlds, while avoiding the pitfalls.

Reverse Engineering the Brain

Enormous amounts of resources have been devoted to building today’s Artificial Intelligence systems.

Those can be classified as Narrow Artificial Intelligence, because they are focused on specific tasks and incapable of general reasoning (check my previous article5 where I explain this in the context of Customer Experience AI).

But the real effort to build an Artificial General Intelligence (AGI) is still under way.

We have yet to understand how the brain works, not only at the biological level (think of it as the hardware) but also at the reasoning and processing level (what would be the software).

This will shed some light on what we are trying to replicate, even if we don’t build it exactly the way it works in Nature.

In the meantime, all those specialized Artificial Intelligences built on top of Nvidia processors, soaking up enormous amounts of energy, will become tools that help us achieve Real Artificial Intelligence.

The Impacts

The exponential development of our Technology is driven by many scientific disciplines helping each other in a virtuous cycle (or spiral).

Our newest attempts in Narrow Artificial Intelligence (ChatGPT, Grok, Claude, etc.) are already helping scientists to innovate in their own fields.

Better and faster biology research (on proteins and amino acids, for example) results in better treatments and health, making us live longer.

Narrow AI is helping to create better materials (nanotechnology) and design better processors.

Those enhanced processors will help AI run better, and help us build better robotics and hardware to assist us every day.

So, we have Artificial Intelligence, Nanotechnology, Robotics, Genetics, etc. helping each other in an ever-increasing virtuous spiral that will lead us into the Singularity.

In the next few decades, we will experience the impacts of this vertiginous rate of development.

It will change the Human Body and the Human Brain, making them more efficient and healthier, even before the Singularity itself.

Our longevity will be greatly increased by those developments, making us more productive as a species.

Our productivity will also benefit from better ways of learning (remember Neo, from The Matrix, and his famous “I know kung fu”), and from new ways of working.

But we will also be impacted by new risks, like the use of Artificial Intelligence for warfare (like Lethal Autonomous Weapon Systems).

Existential Risks

When we talk about “Existential Risks”, we are not talking about events whose consequences cause a finite or manageable amount of damage.

We are talking about World Ending events. All Life Ending events.

And with Artificial Intelligence, Genetics and Nanotechnology, those events leave the realm of dystopian science fiction and become real possibilities.

As technology and science advance, those risks appear around us even more frequently than we care to imagine.

For example, when the CERN Large Hadron Collider (LHC) started operating in 2008, some people believed its experiments could create a micro Black Hole.

Of course, this was an exaggeration: even if such a black hole were created, it would be so minuscule that it could pose no danger.6

But it shows how we are starting to dabble in matters that could, in the wrong circumstances, mean destruction at the cosmological level.

Think of the first nuclear bomb. Before that, it would have been unthinkable that we, as a species, would be able to end all life on Earth.

Now we are very capable of such destruction.

Genetic manipulation of viruses, fungi and bacteria can create strains so lethal that they could wipe out most of the human population.

But a single disease can hardly wipe out an entire species, because of the high variability of our immune systems and genetics.

So, at least part of the human species would survive.

Now think about a nanotechnological product gone wrong.

One designed to disassemble certain types of waste or materials, and able to replicate itself as needed to cope with larger workloads.

An original programming defect, or a defect introduced by faulty replication, causes it to start disassembling everything around it, living and inert.

This is what is known as “The Grey Goo Scenario”, a term coined by nanotechnology pioneer K. Eric Drexler and discussed at length by Ray Kurzweil.

Everything on Earth would become that grey goo byproduct of the faulty nanotech process gone rogue.

It could wipe out all life in as little as two weeks, and we would be powerless to stop it.
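The speed of this scenario comes, once again, from exponential growth: a self-replicating machine only needs to double a limited number of times to go from microscopic to planetary scale. The sketch below counts those doublings. Every number in it (the mass of a single replicator, the mass to be consumed and the doubling time) is a hypothetical placeholder chosen only to show the arithmetic, not an estimate from Kurzweil or anyone else, and the function names are illustrative.

```python
import math


def doublings_needed(initial_mass_kg: float, target_mass_kg: float) -> int:
    """Number of doublings for a self-replicating population to grow
    from initial_mass_kg to at least target_mass_kg."""
    return math.ceil(math.log2(target_mass_kg / initial_mass_kg))


def days_to_reach(initial_mass_kg: float, target_mass_kg: float, doubling_time_hours: float) -> float:
    """Time in days, assuming a constant doubling time and no limits on
    raw material or energy (a strong, purely illustrative assumption)."""
    return doublings_needed(initial_mass_kg, target_mass_kg) * doubling_time_hours / 24


# Hypothetical placeholders: a 1e-15 kg replicator, 1e15 kg of material
# to consume, and one doubling every 3 hours.
n = doublings_needed(1e-15, 1e15)
print(f"doublings needed: {n}")
print(f"time needed: {days_to_reach(1e-15, 1e15, 3):.1f} days")
```

Even a jump of thirty orders of magnitude takes only about 100 doublings, so with these made-up numbers the whole process would take 12.5 days. Halving the doubling time halves the total, which is why the scenario leaves so little room to react.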

Our immune systems have not evolved to deal with something like this.

And let’s not even get started on the “Terminator” scenario, where a superior Artificial Intelligence, coupled with advanced Robotics, decides to get rid of the human species.

So, what can we do as a species to avoid annihilating ourselves while trying to reach higher levels of technology?

Defenses

We can plan and build defenses for dealing with those Existential Risks before deploying the technologies that could trigger the undesired events.

For example, by bolstering our immune systems with more powerful vaccines and gene therapies, capable of dealing with unknown biological threats.

When dealing with the Grey Goo, we could create an artificial immune system, integrated not only into our human bodies, but into the whole environment.

Only defensive nanotechnology would be able to stop a rogue or faulty nanotechnology from turning everything into ashes in a matter of days.

And we are already trying to infuse Ethics and Human Morality into our early attempts at Artificial Intelligence, with varied results.

As our understanding of the Human Thought Process and Human Intelligence increases, we will be able to try to replicate them using technology.

And, hopefully, we will be able to teach it “how to be good” too.

Relinquishment

Building defenses is not the only way to deal with the Existential Risks.

In March 2023, at the height of the Generative Artificial Intelligence hype, a group of renowned Computer Scientists and Artificial Intelligence experts grew deeply worried about the perceived recklessness of our AI Gold Rush.

They signed an open letter titled “Pause Giant AI Experiments: An Open Letter”.7

The idea was to convince the major AI players to pause, for at least six months, the training of AI systems more powerful than GPT-4, until some guardrails could be agreed upon and established.

The main driver was the fear of inadvertently giving birth to the first Artificial General Intelligence, one capable of enhancing itself and surpassing humans in weeks or perhaps days.

An all-powerful Artificial General Intelligence without any regard for human values, ethics and morals could very well mean the end of the human species too.

So, Relinquishment is another option for dealing with technologies that could pose Existential Risks.

Temporary or permanent relinquishment.

Unfortunately, this option entails the danger of rogue agents (private actors or governments) ignoring the relinquishment accords and developing the forbidden tech underground.

And ultimately this would be even more dangerous, because without “the good guys” trying to develop defenses, any mishap would end in disaster.

Regulations

And, finally, we could try to create strong regulations to discourage technology players from following paths that could become harmful in the long run.

The European Union is following this idea, as you can read in my article “Prohibited Artificial Intelligence Practices in the European Union”.8

But this approach also has important drawbacks.

While the European Union imposes stiff regulations on the research and development of AI, other economic blocs, like China and the US, have not adopted comparable rules.

This entices researchers to migrate to territories where their work isn’t tangled up in red tape, effectively defeating the purpose of the regulations in the first place.

So, unless there is a general agreement on the regulations applied to technologies that pose Existential Risks, enforcing them in one region alone won’t solve the problem.

Conclusion

I hope this summary and discussion of the Technological Singularity helped you understand the exciting, albeit dangerous, times we are approaching.

From now on, the only thing we can be sure of is Change.

And that such Change will happen at an exponential rate, so we must be capable of adapting swiftly to the new environment, both at the personal and business level.

But for those of us who have acquired the ability to turn those threats into opportunities, and to absorb new technologies as they come, these will be times of unprecedented success.

Welcome to the New World.

Thank you for reading my publication. If you find it helpful or inspiring, please share it with your friends and coworkers. I write weekly about Technology, Business and Customer Experience, which lately has me writing a lot about Artificial Intelligence as well, because it is permeating everything. Don’t hesitate to subscribe for free to this publication, so you can stay informed on this topic and everything related that I publish here.

As usual, please leave any comments and suggestions you may have in the comments area. Let’s start a nice discussion!

When you are ready, here is how I can help:

“Ready yourself for the Future” - Check out my FREE instructional video (if you haven’t already)

If you think Artificial Intelligence, Cryptocurrencies, Robotics, etc. will cause businesses to go belly up in the next few years… you are right.

Read my FREE guide “7 New Technologies that can wreck your Business like Netflix wrecked Blockbuster” and learn which technologies you must be prepared to adopt, or risk joining Blockbuster, Kodak and the horse carriage in the history books.

References

Technological singularity. (2024, October 30). In Wikipedia. https://en.wikipedia.org/wiki/Technological_singularity

Kurzweil, R. (2005). The Singularity is Near. Penguin Books.

Neuralink Corp. (2024, November 04). “Neuralink — Pioneering Brain Computer Interfaces”.

Kardashev scale. (2024, November 04). In Wikipedia. https://en.wikipedia.org/wiki/Kardashev_scale

Zorrilla, A. (2023, April 10). Artificial Intelligence for Customer Experience. The Entrepreneuring AI. https://alfredozorrilla.com/p/artificial-intelligence-for-customer-experience

CERN Website. (2024, November 20). “Will CERN generate a black hole?”. https://home.cern/resources/faqs/will-cern-generate-black-hole

Future of Life Institute. (2023, March 22). “Pause Giant AI Experiments: An Open Letter”. https://futureoflife.org/open-letter/pause-giant-ai-experiments/

Zorrilla, A. (2024, April 29). Prohibited Artificial Intelligence Practices in the European Union. The Entrepreneuring AI. https://alfredozorrilla.com/p/prohibited-artificial-intelligence-practices-eu