Yes. Deckard had to find out if she was a Replicant… and fast. One of the dangerous Nexus-6 android models that escaped, to be precise.

Replicants were physically and intellectually indistinguishable from ordinary humans. A physician could examine them, take blood tests, and still wouldn’t find a difference.

You could ask them questions about their past, their families, etc. and they would have answers as vivid and emotional as anyone’s.

But they weren’t capable of showing empathy. And that’s where the Voight-Kampff Test came in.

In today’s post:

Where is AI taking us? The advent of (narrow) AI is changing the way we learn, the way we work, and more.

Where is AI taking us?

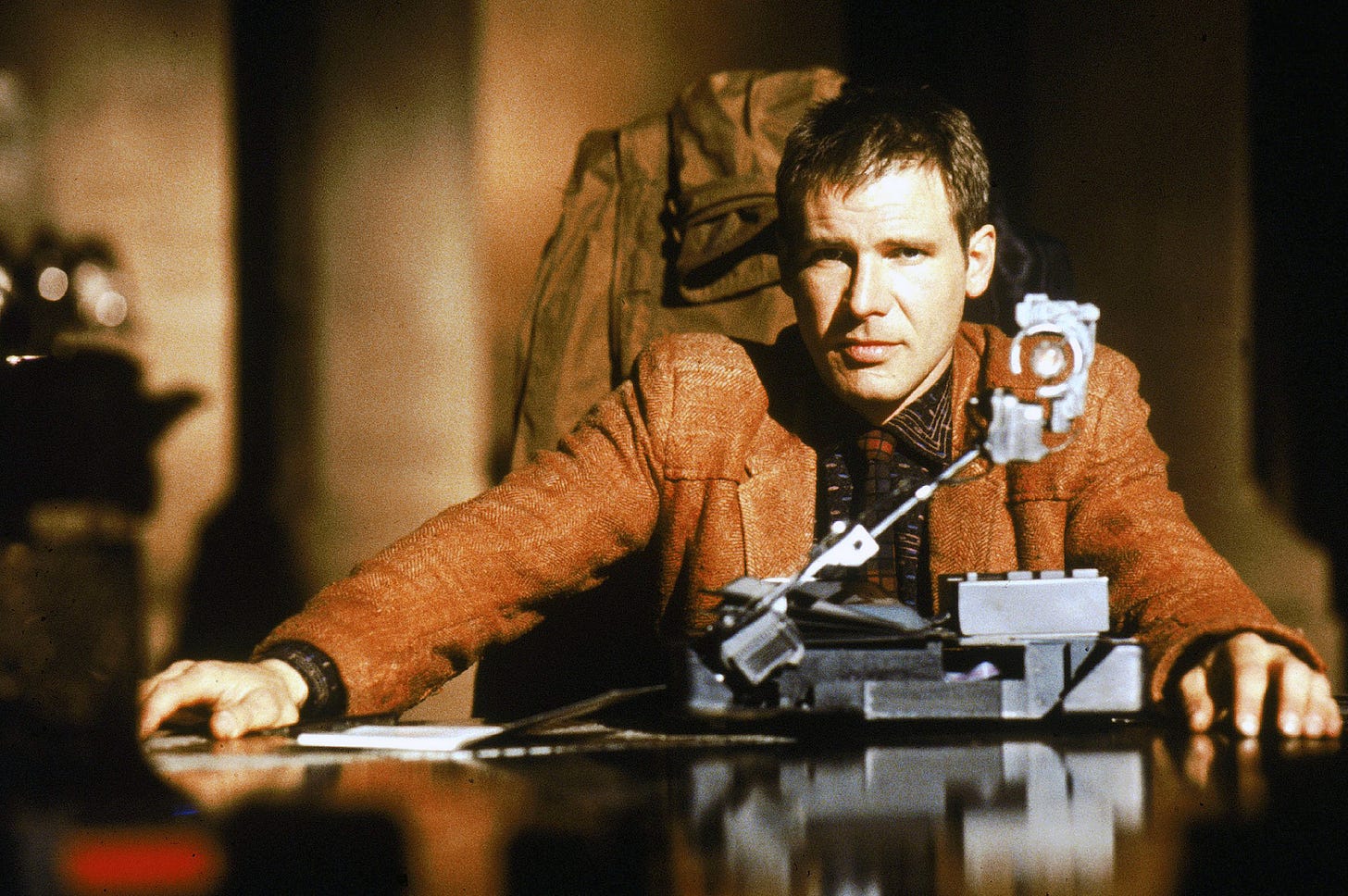

Blade Runner came out in 1982. It revolves around Rick Deckard, a former police officer hunting down “replicants”: androids with advanced Artificial Intelligence.

Replicants were almost indistinguishable from humans, and were created for dangerous jobs and environments where humans didn’t want to go because of the risks. It’s only logical that, periodically, some of them attempted to escape.

And the interesting part is the “almost”. They were physically identical to humans. They were also intellectually identical. You couldn’t tell a replicant from a human with knowledge tests or physical ones.

But they lacked empathy. So, a psychological-technological test named the Voight-Kampff Test was devised.

AI all around us

The AI we have today is nowhere near comparable to Blade Runner’s AI (embedded in the Replicants).

I have written a bit about this in my post “Artificial Intelligence for Customer Experience”1. The current AI trend is what we call “Narrow AI”: capable of performing specific tasks better and faster than humans, but unable to generalize.

What we call AGI (Artificial General Intelligence) is still years away.

But what we have now is already having a profound impact on many things we do. And not necessarily for the better.

A few examples:

Human Resources specialists start using AI to sift through and classify candidates’ resumes. Then candidates start using Generative AI to apply en masse to job opportunities.

Students start using Generative AI to cheat on their homework. Teachers start hiding AI prompts as white-colored text in the questions, so that the AI covertly reveals itself in the answers.

Big law firms start using Generative AI to perform some of the tasks recent graduates used to do. Then they start rescinding job offers to many of the candidates they would usually have hired.

etc.

And some industries are already scared of the potential impact, and trying to prepare:

Script writers are already fighting against the use of their copyrighted content to train AI systems that might start replacing them in the future.

The number of freelance gigs on platforms like Upwork and Fiverr related to copywriting and graphic design has dropped substantially.

New AI tools like Sora promise to create short videos that previously required many specialists.

Will the time come when we have to publicize the fact that something was created by a human rather than by an AI?

Will we ever need a Voight-Kampff Test?

It is funny to think about it, but with each passing day it becomes harder to detect content created by AI.

We are already using AI systems to detect AI-generated content, because we would be unable to do it by ourselves.

At the same time, we already use AI models to create data that serves to train other AI models (synthetic data).

When will “AI-generated” stop being a derogatory term, and become something desirable and marketable?

What are we doing, as individuals and organizations, to prepare for that moment?

As I explain in my article “How NOT to apply Artificial Intelligence in your Business”2, using AI for Customer Service isn’t necessarily a good idea in every case.

For now.

Like the Replicants, current AI chatbots lack empathy (and many other traits of human intelligence).

But what if they get good enough to make us think they have it? Will they replace millions of Customer Service Representatives overnight?

What shields us from being covertly served by AI systems instead of human individuals?

Some government bodies are already trying to regulate such things, as I discuss in “Prohibited Artificial Intelligence Practices in the European Union”3.

We are just at the beginning of it.

The Road Ahead

What do you think about this? Do you have a personal or organizational plan for the unavoidable changes that we are going to be part of?

Let me know in the comments!

When you are ready, here is how I can help:

“Ready yourself for the Future” - Check my FREE instructional video (if you haven’t already)

If you think Artificial Intelligence, Cryptocurrencies, Robotics, etc. will cause businesses to go belly up in the next few years… you are right.

Read my FREE guide “7 New Technologies that can wreck your Business like Netflix wrecked Blockbuster” and learn which technologies you must be prepared to adopt, or risk joining Blockbuster, Kodak and the horse-drawn carriage in the Ancient History books.

References

1. Artificial Intelligence for Customer Experience: https://alfredozorrilla.substack.com/p/artificial-intelligence-for-customer-experience

2. How NOT to apply Artificial Intelligence in your Business: https://alfredozorrilla.substack.com/p/how-not-to-apply-artificial-intelligence

3. Prohibited Artificial Intelligence Practices in the European Union: https://alfredozorrilla.substack.com/p/prohibited-artificial-intelligence-practices-eu